Capgemini, GSK & IBM

Enabling Quantum Chemistry for Drug Discovery with Haiqu

The pharmaceutical industry seeks drugs with high potency and precise selectivity to improve efficacy, reduce side effects, and lower late-stage failure rates. Targeted covalent drugs are especially promising because they form a specific, irreversible bond with a target protein via a reactive chemical group called a warhead. When properly tuned, this mechanism delivers exceptional potency and durability; aspirin is one of the earliest examples. The challenge is predicting warhead reactivity: higher reactivity generally improves potency, but excessive reactivity undermines selectivity. Accurately balancing this trade-off remains a major bottleneck in drug discovery.

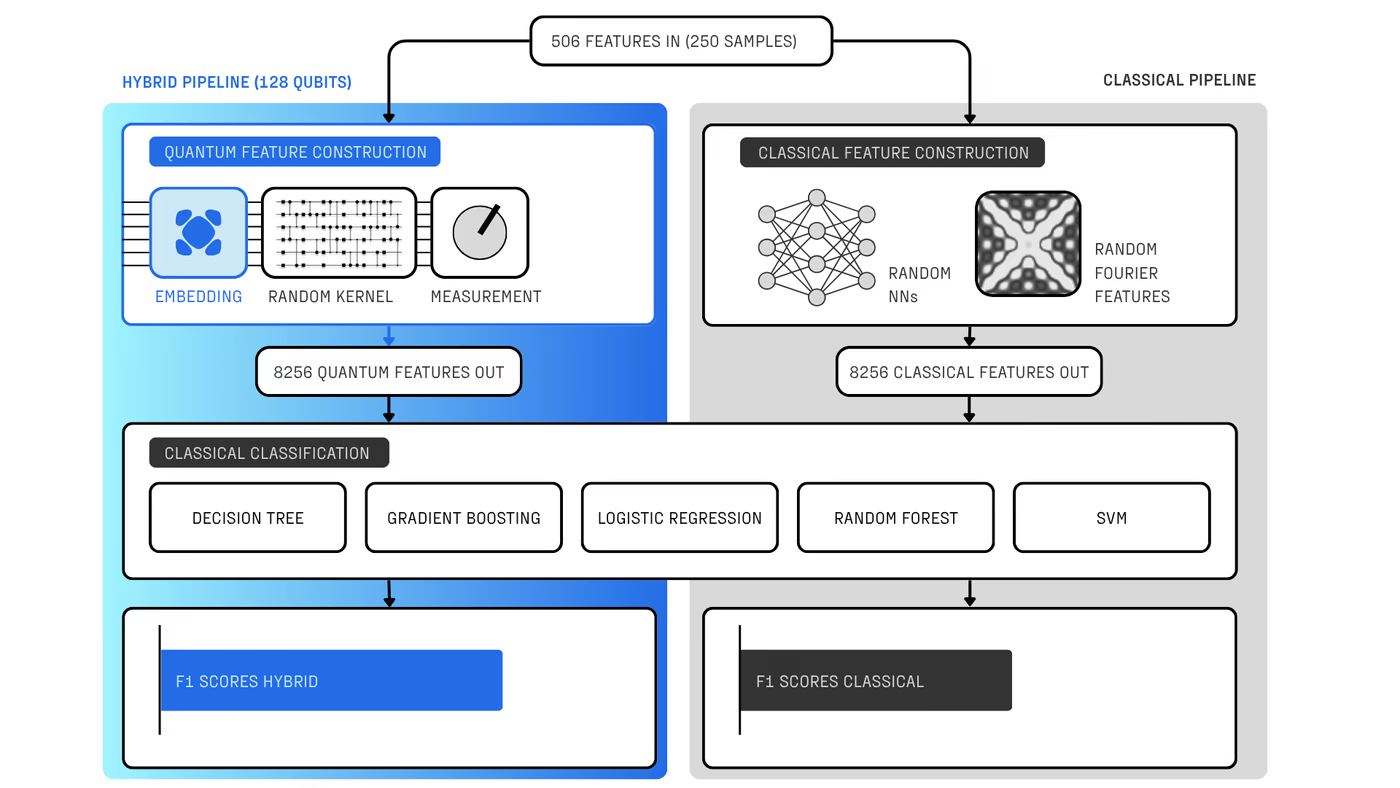

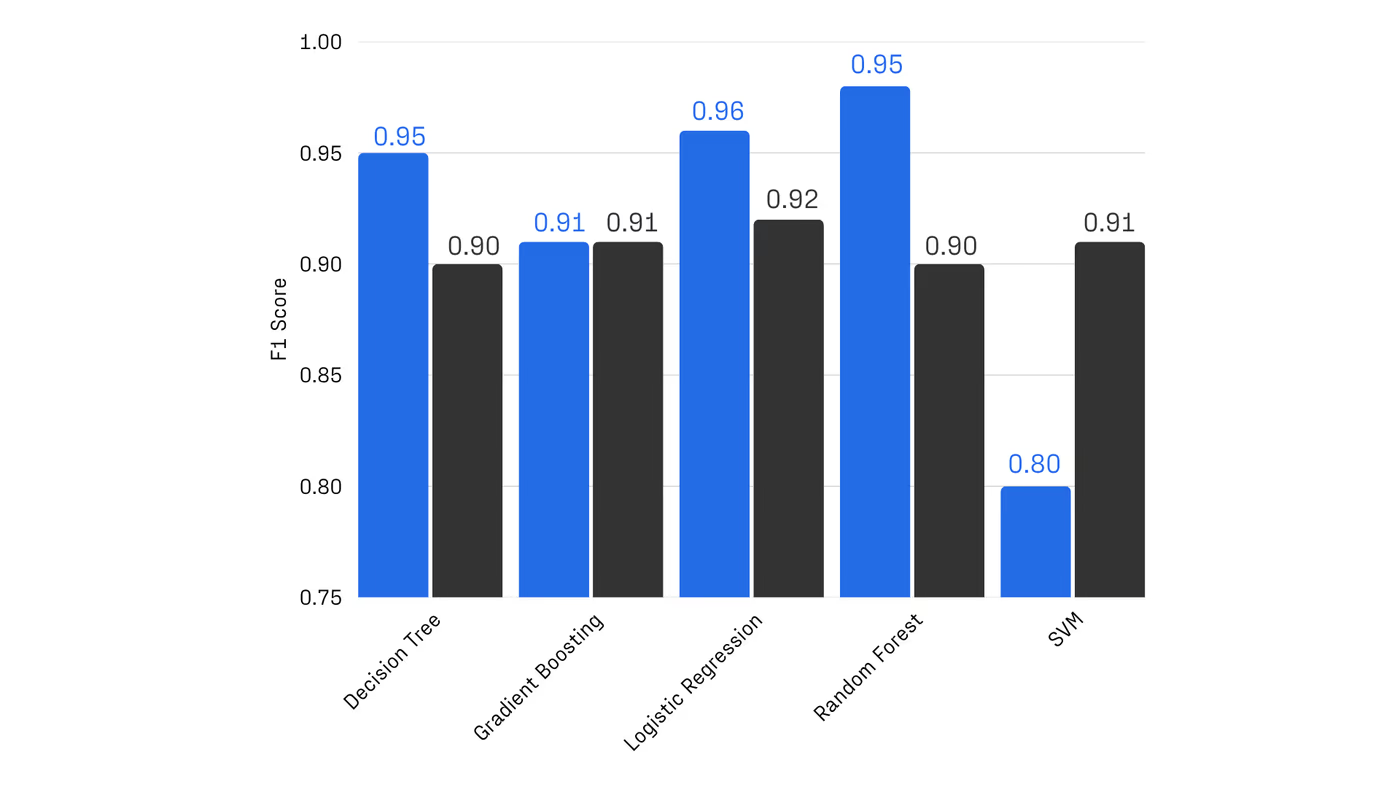

With R&D costs exceeding $2B per drug, pharma increasingly combines machine learning and computational chemistry to accelerate discovery. A powerful approach uses first-principles calculations to generate “quantum fingerprints”: physically grounded features that improve reactivity prediction. However, classical simulation methods face a hard trade-off: affordable approximations are too inaccurate to capture critical many-body effects, while more accurate methods scale poorly and become too expensive for practical screening.

Problem: Quantum chemistry workloads exceed today’s hardware limits on circuit depth, noise tolerance, and cost.

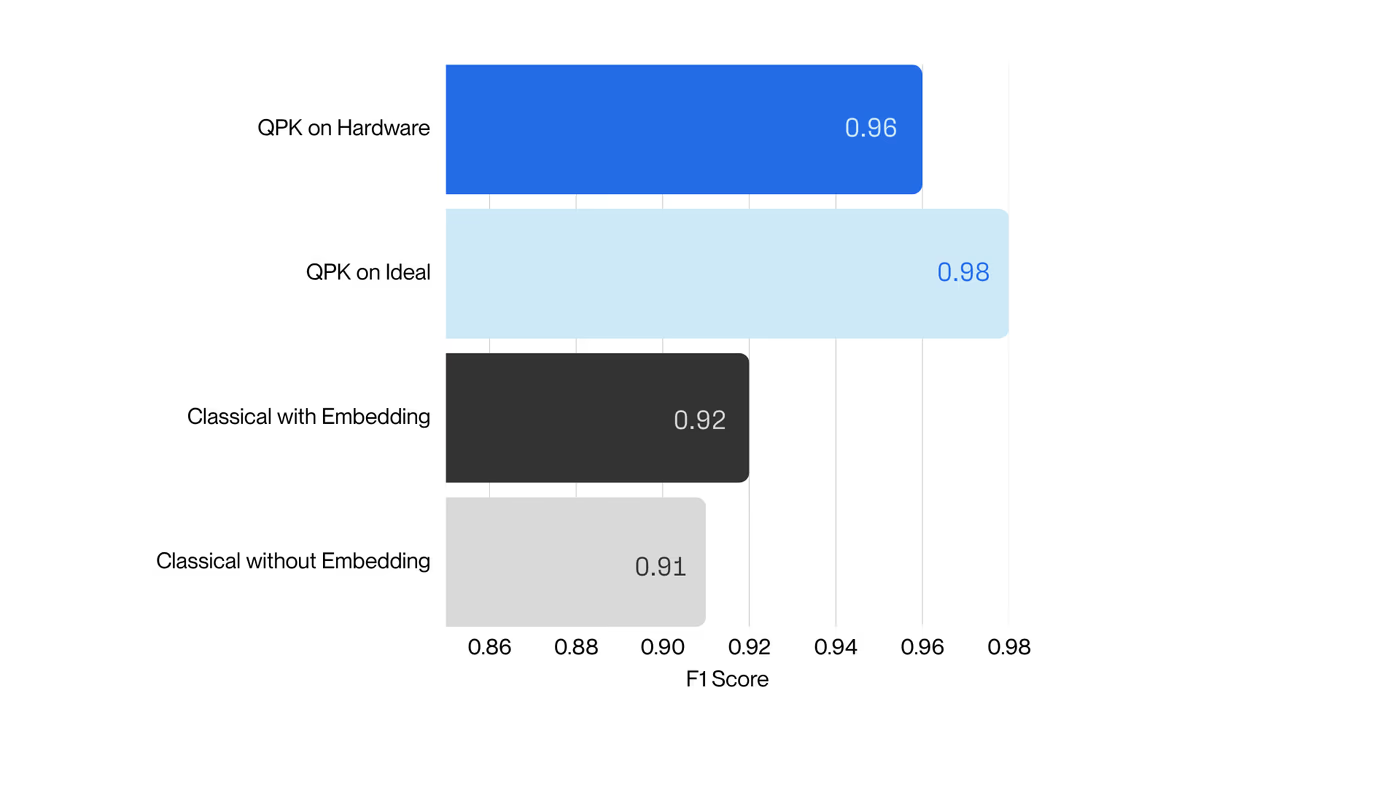

Quantum computing offers a solution, but until now it has been constrained by hardware noise, which limits usable circuit depth to a few hundred two-qubit gates. Haiqu, working with Capgemini, IBM, and GSK, broke this barrier by demonstrating one of the largest electronic-structure Hamiltonian simulations ever run on real quantum hardware for covalent drug warheads. Using advanced circuit compression and middleware execution, the team first reduced circuit depth by 15.5×, then enabled end-to-end execution by running sub-circuits of up to 371 gates.

Solution: Decompose prohibitively deep quantum runs into hardware-friendly, separable blocks.

Collectively, these results establish a scalable, hardware-realistic path for running Hamiltonian simulations on larger active spaces, while maintaining sufficient accuracy for molecular reactivity prediction.

Impact: With Haiqu, chemists build expertise ahead of broader hardware advances

For decision-makers, the implications are clear and immediate: Haiqu dramatically lowers the cost and increases the performance of quantum chemistry workloads, transforming quantum computing from a long-term theoretical research bet into a near-term commercial pilot program on real quantum hardware. By making deep Hamiltonian simulations feasible on today’s quantum hardware, Haiqu enables pharmaceutical teams to:

- Explore larger and more realistic molecular spaces

- Generate predictive quantum features unavailable to classical methods

- Integrate quantum simulations directly into machine-learning-driven discovery pipelines

- Accelerate early-stage drug discovery while reducing computational cost and increasing the success rate of pilot experiments

Crucially, this is not a promise for the next decade. Haiqu makes high-value quantum workloads commercially viable today, allowing enterprises to capture competitive advantage years ahead of hardware-only roadmaps.

Quantum for business. Run more with Haiqu.